Author: Panagiotis Krokidas | NCSR “Demokritos”

You’ve probably heard of high-performance computing (HPC) systems, those massive machines filling entire rooms with racks of processors. They’ve long been the workhorses of science, tackling problems so complex that a single error could change the outcome, from climate models to protein folding. And just when that seems unbeatable, along come quantum computers: machines that don’t just crunch numbers but play by quantum rules.

So, are quantum computers here to dethrone HPC, or to join forces? In this article, we’ll take a quick trip through HPC’s history and see how classical and quantum technologies are transforming drug discovery and materials science. Finally, we’ll look at how the NOUS project is helping build the infrastructure for this new era of scientific computing.

When was HPC born?

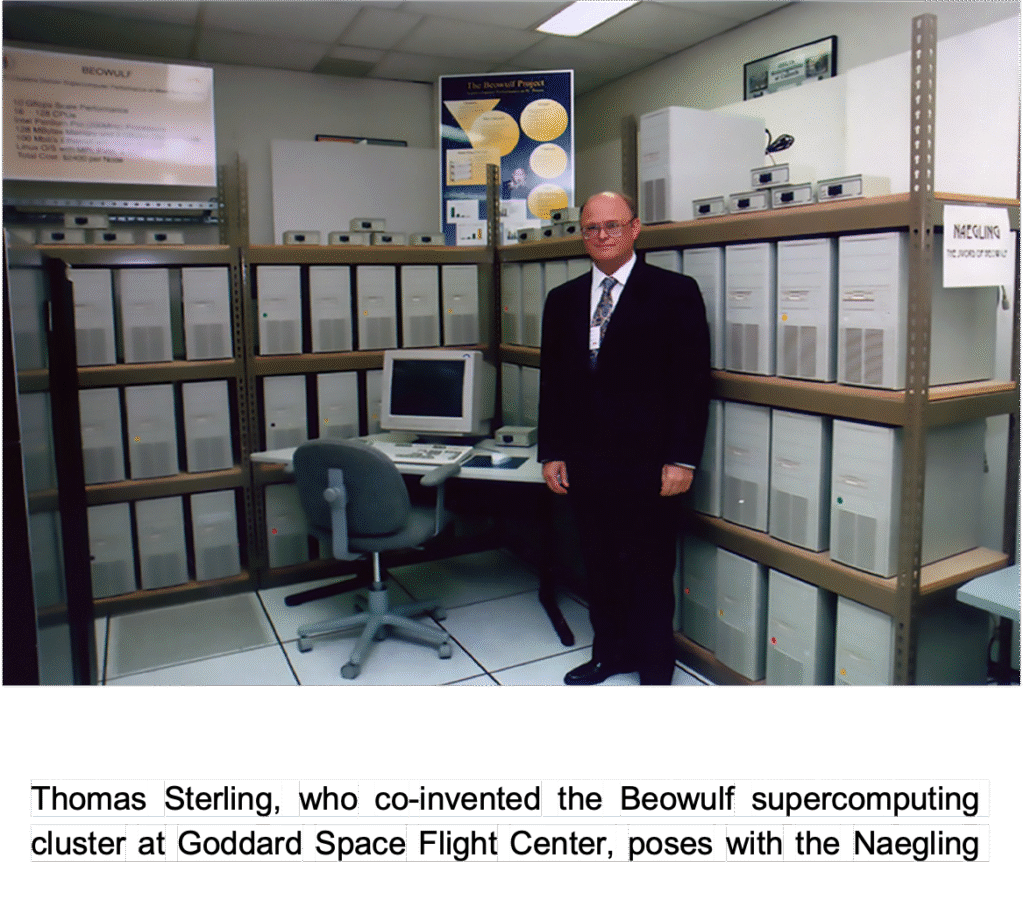

High-performance computing (HPC) rests on a simple idea: don’t solve problems step by step—solve them in parallel across many processors. That concept took shape in the 1980s and hit a milestone in 1994, when NASA’s Thomas Sterling and Donald Becker built “Beowulf,” a powerful cluster made from cheap, off-the-shelf computers.1

It showed that scalable, high-performance systems didn’t need exotic hardware. The next leap came in the mid-2000s,2 when GPUs—once for gaming—were repurposed for scientific work, offering huge speedups. Today, thanks to GPUs, NVIDIA has become a central force in AI and HPC alike.3
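The divide-and-combine idea behind a cluster can be sketched in a few lines of Python. This is only an illustration, not how Beowulf or any production system was programmed: the function names and the choice of problem (estimating π by numerical integration) are assumptions made for the example, and threads on one machine stand in for the separate nodes of a real cluster.

```python
from concurrent.futures import ThreadPoolExecutor


def partial_integral(start, stop, n_total):
    """Midpoint-rule share of the integral of 4/(1+x^2) on [0, 1] for slices start..stop-1."""
    h = 1.0 / n_total
    return sum(4.0 / (1.0 + ((i + 0.5) * h) ** 2) for i in range(start, stop)) * h


def parallel_pi(n_total=200_000, n_workers=4):
    """Estimate pi by giving each worker one contiguous chunk of the slices."""
    # Chunk boundaries: worker k handles slices bounds[k] .. bounds[k+1]-1.
    bounds = [n_total * k // n_workers for k in range(n_workers + 1)]
    chunks = list(zip(bounds[:-1], bounds[1:]))
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        # Each chunk is computed independently; the results are combined at the end.
        parts = pool.map(lambda c: partial_integral(c[0], c[1], n_total), chunks)
        return sum(parts)


print(parallel_pi())
```

On a real cluster the same pattern would typically use a message-passing library, so that each chunk runs on a different machine and only the small partial results travel over the network.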

Scientific HPC

HPC sits at the core of today’s toughest scientific challenges: designing new drugs, creating advanced materials, modeling climate, and uncovering patterns in massive datasets. It even powers the training of language models that can chat with us naturally. Scientific computing has always been HPC’s driving force: from simulating molecular interactions to modeling stars and turbulent flows, supercomputers remain essential engines of discovery and innovation.

New drugs, new materials and the mysteries of proteins

Designing drugs, chemicals, and advanced materials means working at the molecular scale, where only HPC can handle the sheer complexity of simulations. These tools let scientists uncover hidden mechanisms, optimize performance, and guide molecular design.

A striking case is Pfizer, which used HPC to virtually screen millions of compounds and rapidly identify candidates for its COVID-19 antiviral pill, Paxlovid.4 HPC also enabled molecular dynamics simulations to fine-tune vaccine components—testing lipid nanoparticle formulations to reduce allergic reactions and improve stability for transport. Even manufacturing was optimized through HPC-based fluid dynamics simulations.

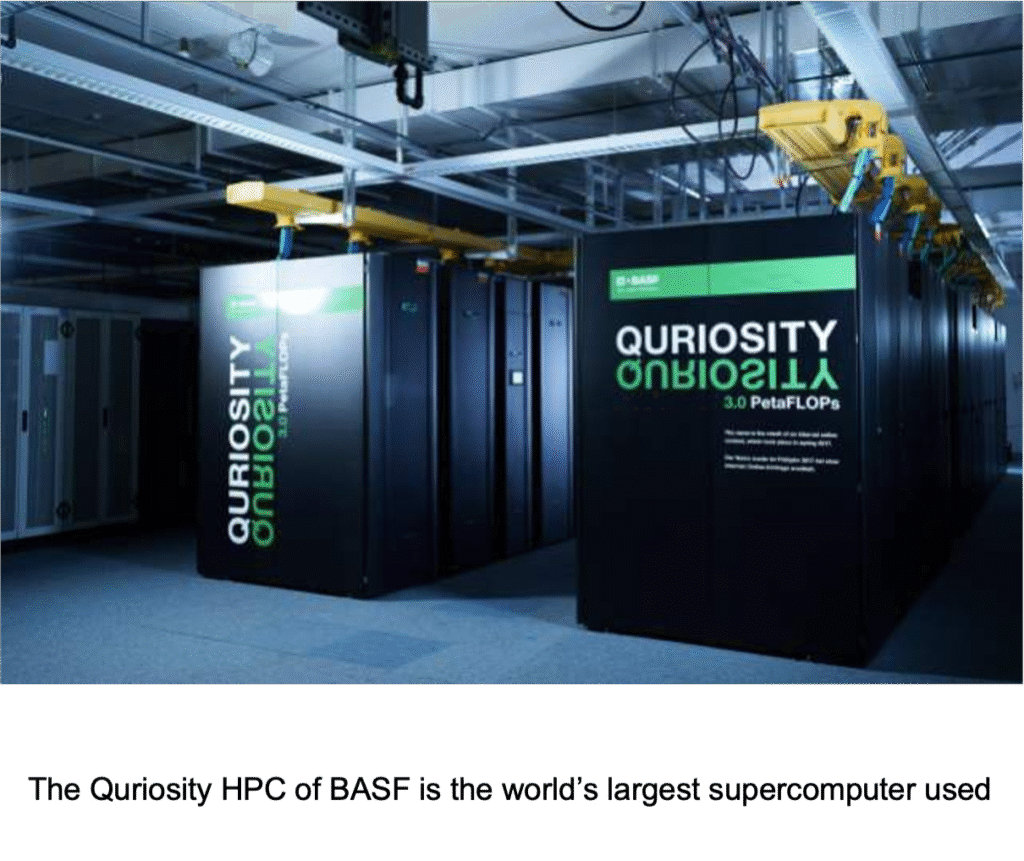

Materials research tells a similar story. SES AI teamed with NVIDIA to screen ~121 million molecules for better electrolytes using the GPU-powered ALCHEMI platform, compressing decades of battery research into months.5 BASF runs “Quriosity,” the world’s largest chemical research supercomputer, to model reactions and narrow hundreds of millions of configurations down to the top ~200 candidates.6 And Shell’s HPC models span from nanoscale electrochemistry to full battery packs, helping develop patented cooling fluids now moving toward commercialization.7,8
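The screening funnel described in these examples, scoring a huge pool of candidates and keeping only a short list, can be sketched as follows. Everything here is hypothetical: `toy_score` is a made-up placeholder for an expensive physics simulation, and the sketch is not how ALCHEMI, Quriosity, or any of the companies above implement their pipelines.

```python
import heapq


def toy_score(candidate_id):
    """Hypothetical stand-in for an expensive simulation; returns a pseudo-score in [0, 1)."""
    return ((candidate_id * 2654435761) % 2**32) / 2**32


def screen(candidate_ids, keep=5):
    """Stream through the candidates and return the `keep` best-scoring ones, best first."""
    # heapq.nlargest keeps only `keep` items in memory at a time,
    # so the full candidate pool never has to be stored or sorted.
    return heapq.nlargest(keep, candidate_ids, key=toy_score)


shortlist = screen(range(100_000), keep=5)
print(shortlist)
```

In practice the scoring step dominates the cost, which is why it is the part that gets spread across thousands of HPC nodes or GPUs.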

The Role of Quantum Computing

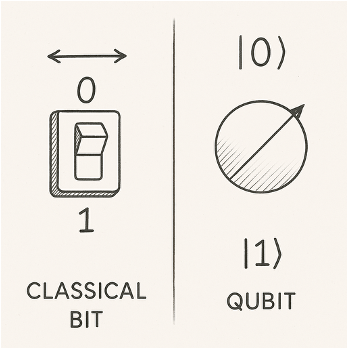

Unlike classical bits, the 1s and 0s that power ordinary computers, qubits can exist in a superposition: a weighted combination of 0 and 1 that resolves to a single value only when measured. Qubits can also be entangled, so that measurement results on one are correlated with results on another no matter the distance between them, although this cannot be used to send information faster than light. Together, these features let quantum algorithms work on many candidate states at once and use interference to amplify the useful answers, which makes quantum computers especially promising for chemistry and materials science, where the systems being simulated are themselves quantum. Most experts expect a hybrid future, where HPC does the heavy lifting and quantum processors tackle the trickiest parts.
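Superposition and entanglement can be made concrete with a tiny classical simulation of two qubits. The sketch below is an illustration in plain Python, with helper names chosen for this example. It applies a Hadamard gate and then a CNOT gate to the state |00⟩, producing a so-called Bell state in which the only possible measurement results are 00 and 11.

```python
import math


def apply(matrix, state):
    """Multiply a gate matrix by a state vector."""
    return [sum(m * a for m, a in zip(row, state)) for row in matrix]


S = 1 / math.sqrt(2)

# Basis order: |00>, |01>, |10>, |11> (first qubit written on the left).
# Hadamard on the first qubit, identity on the second.
HADAMARD_FIRST = [
    [S, 0, S, 0],
    [0, S, 0, S],
    [S, 0, -S, 0],
    [0, S, 0, -S],
]
# CNOT: flips the second qubit when the first qubit is 1.
CNOT = [
    [1, 0, 0, 0],
    [0, 1, 0, 0],
    [0, 0, 0, 1],
    [0, 0, 1, 0],
]


def bell_state():
    state = [1.0, 0.0, 0.0, 0.0]           # start in |00>
    state = apply(HADAMARD_FIRST, state)   # superposition: (|00> + |10>) / sqrt(2)
    return apply(CNOT, state)              # entanglement: (|00> + |11>) / sqrt(2)


def probabilities(state):
    """Probability of each measurement result, in basis order."""
    return [abs(a) ** 2 for a in state]


print(probabilities(bell_state()))
```

The two qubits always agree when measured, yet neither has a definite value beforehand. Note that the state vector doubles in length with every added qubit, which is why classical machines struggle to simulate large quantum systems.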

For now, quantum hardware is still in its infancy. Current noisy intermediate-scale quantum (NISQ) devices typically have no more than a few hundred qubits and are prone to errors, so most applications rely on hybrid workflows in which HPC runs the bulk of the computation and quantum processors handle a few specialized steps. The model echoes the way GPUs serve as co-processors: specific tasks are offloaded to a quantum processing unit (QPU).9 Early examples include variational quantum algorithms for drug discovery and a Moderna–Yale–NVIDIA study using CUDA-Q to simulate quantum neural networks for predicting molecular properties.10

Industry pilots show the promise of hybrid approaches. Hyundai and IonQ use the Variational Quantum Eigensolver to model lithium reactions in next-generation batteries.11 AstraZeneca, working with IonQ, AWS, and NVIDIA, demonstrated a hybrid simulation of a key drug synthesis reaction on IonQ’s Forte QPU, achieving a reported speedup of more than 20× while maintaining accuracy, in what was described as the most advanced chemical simulation yet run on quantum hardware.12
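The hybrid loop behind variational methods such as the Variational Quantum Eigensolver can be sketched with a toy problem. In this illustration a classical optimizer repeatedly asks a "quantum" routine for the energy of a trial state and nudges one parameter downhill. The one-qubit Hamiltonian H = Z + 0.5·X, the function names, and the optimizer settings are all assumptions chosen for the example, and the quantum step is simulated classically rather than sent to real hardware. None of this reflects the actual Hyundai or AstraZeneca workflows.

```python
import math


def measure_energy(theta):
    """Stand-in for the QPU call: energy of H = Z + 0.5*X in the trial state Ry(theta)|0>."""
    amp0, amp1 = math.cos(theta / 2), math.sin(theta / 2)
    expect_z = amp0**2 - amp1**2   # expectation value of Z
    expect_x = 2 * amp0 * amp1     # expectation value of X
    return expect_z + 0.5 * expect_x


def parameter_shift_gradient(theta):
    """Gradient from two extra energy evaluations (the parameter-shift rule for rotation gates)."""
    shift = math.pi / 2
    return 0.5 * (measure_energy(theta + shift) - measure_energy(theta - shift))


def run_vqe(theta=0.1, learning_rate=0.4, steps=200):
    """Classical outer loop: plain gradient descent on the circuit parameter."""
    for _ in range(steps):
        theta -= learning_rate * parameter_shift_gradient(theta)
    return theta, measure_energy(theta)


theta_opt, energy = run_vqe()
print(theta_opt, energy)
```

For this Hamiltonian the exact lowest energy is -√1.25, which gives a value to compare the result against. In a real hybrid setup only `measure_energy` would run on the QPU, while the optimizer and all surrounding pre- and post-processing would stay on the classical HPC side.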

Looking Ahead: NOUS and the Future of Scientific Computing

As HPC continues to evolve—and quantum computing matures—the need for flexible, integrated infrastructure becomes more urgent. This is precisely the vision behind the NOUS project: to create a unified European platform that seamlessly connects classical HPC, quantum processors, and cloud-edge resources. NOUS is not just about raw compute power; it’s about orchestrating complex scientific workflows across the best-suited resources, whether that’s a supercomputer, a GPU cluster, or a future quantum accelerator.

Sources